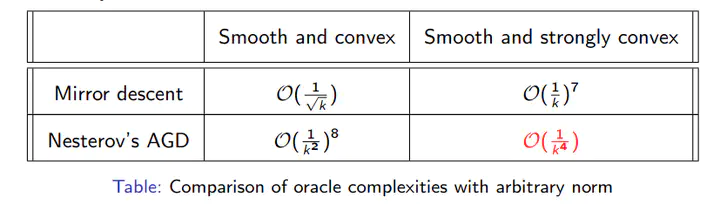

Minimax type of problems arise in several domains such as machine learning, optimization, statistics, communication, and game theory. However, a majority of results are established for the Euclidean norm due to its special self-dual nature. In this project, we propose Generalized Conceptual Dual Implicit Accelerated Gradient Descent (GC-DIAG) which is adapted from the Conceptual Dual Implicit Accelerated Gradient (C-DIAG) [ 1 ] for solving smooth minimax optimization problems $ \min_\mathbf{x}\max_\mathbf{y} g(\mathbf{x},\mathbf{y})$ where $ g(.,.)$ is smooth and $g(\mathbf{x},.) $ is concave for each $\mathbf{x}$ . We prove $\mathcal{O}(1/k^2)$ convergence rate for the primal dual gap using a potential-function based proof and Bregman Divergence framework. We also prove $\mathcal{O}(\frac{1}{k^4})$ convergence rate using Nesterov’s accelerated gradient descent and a restarting strategy, similar to [ 2 ], which is an improvement over $\mathcal{O}(\frac{1}{k})$ for smooth and strongly convex functions with respect to an arbitrary norm.